
TCP uses several timers to ensure that excessive delays are not encountered during communication. Several of these timers are elegant, handling problems that are not obvious at first analysis. Each timer is examined below to show its role in ensuring that data is delivered properly from one end of a connection to the other.

TCP implementations use four timers: the retransmission timer, the persistence timer, the keepalive timer, and the time-wait (quiet) timer. The retransmission timer is set to the retransmission timeout (RTO), which is calculated from three round-trip time (RTT) quantities –

- Measured RTT (RTTm) – The measured round-trip time for a segment is the time required for the segment to reach the destination and be acknowledged, although the acknowledgment may cover other segments as well.

- Smoothed RTT (RTTs) – The weighted average of RTTm. RTTm fluctuates so much that a single measurement cannot be used to calculate the RTO.

- Deviated RTT (RTTd) – Most implementations do not use RTTs alone, so the RTT deviation is also calculated and used to find the RTO.

The remaining timers are –

- Persistence Timer – To deal with a zero-window-size deadlock, TCP uses a persistence timer. When the sending TCP receives an acknowledgment with a window size of zero, it stops sending and starts the persistence timer. If the later acknowledgment that reopens the window is lost, both sides would otherwise wait for each other forever. When the persistence timer goes off, the sending TCP sends a special segment called a probe. This segment contains only 1 byte of new data. It has a sequence number, but its sequence number is never acknowledged; it is even ignored in calculating the sequence number for the rest of the data. The probe causes the receiving TCP to resend the acknowledgment that was lost.

- Keepalive Timer – A keepalive timer is used to prevent a long idle connection between two TCPs. Suppose a client opens a TCP connection to a server, transfers some data, and then becomes silent, perhaps because the client has crashed. In this case, the connection would remain open forever. To remedy this, the server uses a keepalive timer. Each time the server hears from the client, it resets this timer. The time-out is usually 2 hours. If the server does not hear from the client after 2 hours, it sends a probe segment. If there is no response after 10 probes, each of which is 75 s apart, it assumes that the client is down and terminates the connection.

- Time-Wait (Quiet) Timer – After a TCP connection is closed, segments that are still making their way through the network may arrive at the closed port. The quiet timer prevents the just-closed port from being reused too quickly and receiving these late segments.
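The keepalive figures quoted above (2 hours idle, 10 probes, 75 s apart) can be combined into a rough worst-case detection time. This is only a sketch: the function name is hypothetical, and it assumes the server waits one full interval after the last probe before giving up.

```python
def keepalive_detection_time(idle_s=2 * 60 * 60, probes=10, interval_s=75):
    """Seconds from the client's last activity until the server gives up.

    Assumption: the server waits one full interval after each probe,
    including the last one, before terminating the connection.
    """
    return idle_s + probes * interval_s


total = keepalive_detection_time()
print(total, "seconds, about", round(total / 60, 1), "minutes")
```

With the defaults from the text this comes to a little over two hours, which is why applications that need to detect dead peers quickly usually shorten these values or use their own heartbeat.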


Interactive end-of-chapter exercises

Supplement to Computer Networking: A Top Down Approach 8th Edition


Computing TCP's RTT and timeout values

Question List

1. What is the estimatedRTT after the first RTT?
2. What is the RTT deviation for the first RTT?
3. What is the TCP timeout for the first RTT?
4. What is the estimatedRTT after the second RTT?
5. What is the RTT deviation for the second RTT?
6. What is the TCP timeout for the second RTT?
7. What is the estimatedRTT after the third RTT?
8. What is the RTT deviation for the third RTT?
9. What is the TCP timeout for the third RTT?

The values are calculated with the following equations:

- DevRTT = (1-beta)*DevRTT + beta*|estimatedRTT - sampleRTT|
- estimatedRTT = (1-alpha)*estimatedRTT + alpha*sampleRTT
- TCP timeout = estimatedRTT + (4*DevRTT)

Answers:

1. The estimatedRTT for RTT1 is 353.75
2. The DevRTT for RTT1 is 27.75
3. The timeout for RTT1 is 464.75
4. The estimatedRTT for RTT2 is 353.28
5. The DevRTT for RTT2 is 21.75
6. The timeout for RTT2 is 440.28
7. The estimatedRTT for RTT3 is 344.12
8. The DevRTT for RTT3 is 34.63
9. The timeout for RTT3 is 482.65
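The three equations can be applied in a short loop. The inputs of the exercise are not shown in the text, so the starting values (estimatedRTT 350, DevRTT 27), the three sampleRTT values (380, 350, 280), and alpha = 0.125, beta = 0.25 below are assumptions, chosen only because they reproduce the listed answers. The sketch also assumes that DevRTT is updated before estimatedRTT, so that it uses the estimate from before the new sample.

```python
def rtt_round(est_rtt, dev_rtt, sample_rtt, alpha=0.125, beta=0.25):
    """Apply one sampleRTT and return (estimatedRTT, DevRTT, timeout)."""
    # DevRTT is updated first, so it uses the estimatedRTT from before this sample.
    dev_rtt = (1 - beta) * dev_rtt + beta * abs(est_rtt - sample_rtt)
    est_rtt = (1 - alpha) * est_rtt + alpha * sample_rtt
    return est_rtt, dev_rtt, est_rtt + 4 * dev_rtt


est, dev = 350.0, 27.0                  # assumed starting values (not given in the text)
for sample in (380.0, 350.0, 280.0):    # assumed sampleRTT values (not given in the text)
    est, dev, timeout = rtt_round(est, dev, sample)
    print(f"estimatedRTT={est:.2f} DevRTT={dev:.2f} timeout={timeout:.2f}")
```

Under these assumed inputs the three printed rows match answers 1 to 9 after rounding to two decimals.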


We gratefully acknowledge the programming and problem design work of John Broderick (UMass '21), which has really helped to substantially improve this site.

Copyright © 2010-2022 J.F. Kurose, K.W. Ross Comments welcome and appreciated: [email protected]

Peter Manton :: Tech Notes

Thursday 25 August 2016, TCP: RTT (Round Trip Times) and RTO (Retransmission Timeouts)


Determining TCP Initial Round Trip Time


I was sitting in the back in Landis TCP Reassembly talk at Sharkfest 2014 (working on my slides for my next talk) when at the end one of the attendees approached me and asked me to explain determining TCP initial RTT to him again. I asked him for a piece of paper and a pen, and coached him through the process. This is what I did.

What is the Round Trip Time?

The round trip time is an important factor when determining application performance if there are many request/reply pairs being sent one after another, because each time the packets have to travel back and forth, adding delay until results are final. This applies mostly to database and remote desktop applications, but not that much for file transfers.

What is Initial RTT, and why bother?

Initial RTT is the round trip time that is determined by looking at the TCP Three Way Handshake. It is good to know the base latency of the connection, and the packets of the handshake are very small. This means that they have a good chance of getting through at maximum speed, because larger packets are often buffered somewhere before being passed on to the next hop. Another point is that the handshake packets are handled by the TCP stack of the operating system, so there is no application interference/delay at all. As a bonus, each TCP session starts with these packets, so they’re easy to find (if the capture was started early enough to catch it, of course).

Knowing Initial RTT is necessary to calculate the optimum TCP window size of a connection, in case it is performing poorly due to bad window sizes. It is also important to know when analyzing packet loss and out of order packets, because it helps to determine if the sender could even have known about packet loss. Otherwise a packet marked as retransmission could just be an out of order arrival.
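As a rough illustration of why the RTT matters for window sizing, the sketch below takes the "optimum window size" to mean the bandwidth-delay product, which is an assumption about what the author has in mind. The function names and the example link speed are hypothetical.

```python
def bdp_bytes(bandwidth_bits_per_s, rtt_ms):
    """Bandwidth-delay product: bytes that must be in flight to keep the path full."""
    return bandwidth_bits_per_s * rtt_ms // 8000  # (bits/s * ms) / 1000 / 8 -> bytes


def throughput_limit_bits_per_s(window_bytes, rtt_ms):
    """Upper bound on throughput when at most one window is sent per round trip."""
    return window_bytes * 8 * 1000 / rtt_ms


# Hypothetical example: a 100 Mbit/s path with an Initial RTT of 42 ms.
print(bdp_bytes(100_000_000, 42), "bytes needed in flight")
print(round(throughput_limit_bits_per_s(65_535, 42) / 1e6, 2), "Mbit/s with a 64 KB window")
```

A window much smaller than the first number caps throughput well below the link speed, which is the "bad window size" case mentioned above.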

Determining Initial RTT

The problem with capture device placement

One of the rules of creating good captures is that you should never capture on the client or the server. But if the capture is taken somewhere between client and server, we have a problem: how do we determine Initial RTT? Take a look at the next diagram and the solution should be obvious:

Instead of just looking at SYN to SYN/ACK or SYN/ACK to ACK, we always look at all three TCP handshake packets. The capture device only sees the SYN after it has already traveled the distance from the client to the capture spot. The same goes for the SYN/ACK from the server, and the colored lines tell us that by looking at all three packets we get the full RTT if we add the timings: the time from SYN to SYN/ACK covers the server side of the path, and the time from SYN/ACK to ACK covers the client side.
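The addition of the two partial timings can be written down directly. This is a minimal sketch; the function name and the millisecond timestamps are made up for illustration and would normally come from the capture file.

```python
def initial_rtt(t_syn_ms, t_synack_ms, t_ack_ms):
    """Return (server_side, client_side, irtt) in ms from capture timestamps."""
    server_side = t_synack_ms - t_syn_ms   # capture point -> server -> capture point
    client_side = t_ack_ms - t_synack_ms   # capture point -> client -> capture point
    return server_side, client_side, server_side + client_side


# Hypothetical timestamps of SYN, SYN/ACK and ACK as seen at the capture point.
server_side, client_side, irtt = initial_rtt(0, 30, 42)
print(server_side, client_side, irtt)
```

The sum is simply the time from the first to the third handshake packet, so it does not depend on where between client and server the capture was taken.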

Frequently asked questions

Question: can I also look at Ping packets (ICMP Echo Request/Reply)? Answer: no, unless you captured on the client sending the ping. Take a look at the dual colored graph again – if the capture device is in the middle you’re not going to be able to determine the full RTT from partial request and reply timings.

Discussions — 18 Responses

Good article. I’ll have to point a few friends here for reference.

Just wanted to mention that I’ve seen intermediate devices like load balancers or proxies that immediately respond to the connection request throw people off. Make sure you know what’s in your path and always double/triple check the clients..

Thanks, Ty. I agree, you have to know what’s in the path between client and server. Devices sitting in the middle accepting the client connection and open another connection towards the server will lead to partial results, so you have to be aware of them. Good point. They’re usually simple to spot though – if you have an outgoing connection where you know that the packets are leaving your LAN and you get Initial RTT of less than a millisecond it’s a strong hint for a proxy (or similar device).

I really liked your article. I have one question:

Lets say we have the below deployment:

Client ——> LoadBalancer ——> Proxy ——–> Internet/Server

In case we “terminate” the TCP connection on the Proxy and we establish a new TCP connection from the Proxy to the Server. Should we measure 2 RTTs one for each connection and the aggregation of them is the final RTT ? What should we measure in order to determine the speed in such deployments ?

Regards, Andreas

Proxies can be difficult, because there is often only one connection per client but many outgoing connections to various servers. It usually complicates analysis of performance issues because you have to find and match requests first.

Luckily, for TCP analysis you only need to care about the RTT of each connection, because packet loss and delays are only relevant to those.

Of course, if you’re interested in the total RTT you can add the 2 partial RTTs to see where the time is spent.

Thanks a lot for your response 🙂

good article

Thanks for mentioning REF, which is of great help

so where we need to capture the tcp packet actually to know the RTT? for ex if its like :

server—-newyork—-chicago—-california—–washington—-server and if i need to know the RTT between server in newyork to washington Please let me know

You can capture anywhere between the two servers and read the RTT between them from SYN to ACK. By reading the time between the first and third handshake packet it doesn’t matter where you capture, which is the beauty of it. But keep in mind that the TCP connection needs to be end-to-end, so if anything is proxying the connection you have to capture at least twice, on each side of the proxying device.

How can I find the packet travel time from client to server when they are on different systems?

To determine the round trip time you always need packets that are exchanged between the two systems. So if I understand your question correctly and you don’t have access to packets of a communication between the client and the server you can’t determine iRTT.

Can u please tell how to solve these type of problems: If originally RTTs = 14 ms and a is set to 0.2, calculate the new RTTs after the following events (times are relative to event 1):

1. Event 1: 00 ms, Segment 1 was sent.
2. Event 2: 06 ms, Segment 2 was sent.
3. Event 3: 16 ms, Segment 1 was timed-out and resent.
4. Event 4: 21 ms, Segment 1 was acknowledged.
5. Event 5: 23 ms, Segment 2 was acknowledged.

I’m sorry, but I’m not exactly sure what this is supposed to be. What is it good for, and what is this a set to 0.2? The only thing that may make sense is measuring the time it took to ACK each segment (1 -> 21ms if measuring the time from the original segment, 5 otherwise, 2 -> 17ms), but I have no idea what to use “a” for 🙂

how to calculate RTT using NS-2(network simulator-2)?

I haven’t played with NS-2 yet, but if you can capture packets it should be the same procedure.

ok..thank you sir

Hi, Subject: iRTT Field Missing in Wireshark Capture

We have two Ethernet boards, one board perfectly accomplishes an FTP file transfer the second does not and it eventually times out early on, mishandling the SYN SYN:ACK phase. We analyzed the two Wireshark captures and noticed that the good capture has the iRTT time stamp field included in the dump while the bad one does not. What is the significance of not seeing that iRTT in the bad capture? Is that indicative to why it fails the xfer?

The iRTT field can only be present if the TCP handshake was complete, meaning you have all three packets in the capture (SYN, SYN/ACK, ACK). If one is missing, the iRTT can’t be calculated correctly and the field won’t be present. So if the field is missing, it means that the TCP connection wasn’t established, which also means that no data transfer was possible.


TCP/IP: TCP Timeout and Retransmission

Jan 17, 2023

To decide what data it needs to resend, TCP depends on a continuous flow of acknowledgments from receiver to sender.

When data segments or acknowledgments are lost, TCP initiates a retransmission of the data that has not been acknowledged.

TCP has two separate mechanisms for accomplishing retransmission, one based on time and one based on the structure of the acknowledgments.

TCP sets a timer when it sends data, and if the data is not acknowledged when the timer expires, a timeout or timer-based retransmission of data occurs.

The timeout occurs after an interval called the retransmission timeout (RTO).

It has another way of initiating a retransmission called fast retransmission or fast retransmit , which usually happens without any delay.

Fast retransmit is based on inferring losses by noticing:

- when TCP’s cumulative acknowledgment fails to advance in the (duplicate) ACKs received over time, or

- when ACKs carrying selective acknowledgment information (SACKs) indicate that out-of-order segments are present at the receiver.

1. Simple Timeout and Retransmission (Time-based) Example


We will establish a connection, send some data to verify that everything is OK, isolate one end of the connection, send some more data, and watch what TCP does.

Logically, TCP has two thresholds R1 and R2 to determine how persistently it will attempt to resend the same segment [RFC1122] .

Threshold R1 indicates the number of tries TCP will make (or the amount of time it will wait) to resend a segment before passing negative advice to the IP layer (e.g., causing it to reevaluate the IP route it is using).

Threshold R2 (larger than R1 ) dictates the point at which TCP should abandon the connection.

R1 and R2 might be measured in time units or as a count of retransmissions.

The value of R1 SHOULD correspond to at least 3 retransmissions, at the current RTO.

The value of R2 SHOULD correspond to at least 100 seconds.

However, the values of R1 and R2 may be different for SYN and data segments.

In particular, R2 for a SYN segment MUST be set large enough to provide retransmission of the segment for at least 3 minutes.

In Linux, the R1 and R2 values for regular data segments are available to be changed by applications or can be changed using the system-wide configuration variables net.ipv4.tcp_retries1 and net.ipv4.tcp_retries2 , respectively.

These are measured in the number of retransmissions, and not in units of time.

The default value for net.ipv4.tcp_retries2 is 15, which corresponds roughly to 13–30 minutes, depending on the connection’s RTO.

The default value for net.ipv4.tcp_retries1 is 3.

For connection establishment, net.ipv4.tcp_syn_retries and net.ipv4.tcp_synack_retries bound the number of retransmissions of SYN and SYN+ACK segments, respectively; their default value is 5 (roughly 180s).
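To see how a retry count translates into elapsed time, the sketch below sums the waits when the timeout doubles after every retransmission and is capped at 120 s. The function name is hypothetical. The 0.2 s starting value corresponds to Linux's minimum RTO, and the 3 s starting value for SYN segments is an assumption (the older initial RTO) that happens to match the "roughly 180s" figure.

```python
def total_wait(first_rto_s, retries, rto_max_s=120.0):
    """Sum the waits for the original send plus `retries` retransmissions.

    Assumes the timeout doubles after each expiry and never exceeds rto_max_s.
    """
    total, rto = 0.0, first_rto_s
    for _ in range(retries + 1):
        total += rto
        rto = min(rto * 2, rto_max_s)
    return total


# Data segments: 15 retries starting from a 0.2 s RTO (best case).
print(round(total_wait(0.2, 15) / 60, 1), "minutes")
# SYN segments: 5 retries starting from an assumed 3 s initial RTO.
print(total_wait(3.0, 5), "seconds")
```

A connection whose RTO is already large when the loss starts reaches the 120 s cap sooner, which is why the same retry count covers a range of durations rather than a single value.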

2. Setting the Retransmission Timeout (RTO)

Fundamental to TCP’s timeout and retransmission procedures is how to set the RTO based upon measurement of the RTT experienced on a given connection.

If TCP retransmits a segment earlier than the RTT, it may be injecting duplicate traffic into the network unnecessarily.

Conversely, if it delays sending until much longer than one RTT, the overall network utilization (and single-connection throughput) drops when traffic is lost.

Knowing the RTT is made more complicated because it can change over time, as routes and network usage vary.

Because TCP sends acknowledgments when it receives data, it is possible to send a byte with a particular sequence number and measure the time (called an RTT sample ) required to receive an acknowledgment that covers that sequence number.

The challenge for TCP is to establish a good estimate for the range of RTT values given a set of samples that vary over time and set the RTO based on these values.

The RTT is estimated for each TCP connection separately, and one retransmission timer is pending whenever any data is in flight that consumes a sequence number (including SYN and FIN segments).

The original TCP specification [RFC0793] had TCP update a smoothed RTT estimator (called SRTT ) using the following formula:

$$\mathrm{SRTT} \leftarrow \alpha\,(\mathrm{SRTT}) + (1 - \alpha)\,\mathrm{RTT}_s$$

Here, SRTT is updated based on both its existing value and a new sample, $\mathrm{RTT}_s$.

The constant α is a smoothing or scale factor with a recommended value between 0.8 and 0.9.

SRTT is updated every time a new measurement is made.

With the original recommended value for α , it is clear that 80% to 90% of each new estimate is from the previous estimate and 10% to 20% is from the new measurement.

This type of average is also known as an exponentially weighted moving average (EWMA) or low-pass filter.

It is convenient for implementation reasons because it requires only one previous value of SRTT to be stored in order to keep the running estimate.
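A minimal sketch of this update, with a hypothetical function name and made-up sample values in milliseconds, shows that only the previous SRTT has to be stored and how slowly the estimate follows a sudden change when α = 0.9:

```python
def update_srtt(srtt, rtt_sample, alpha=0.9):
    """One EWMA step: keep alpha of the old estimate, add (1 - alpha) of the sample."""
    return alpha * srtt + (1 - alpha) * rtt_sample


srtt = 100.0                       # previous estimate in ms (made-up value)
for rtt_sample in [200.0] * 5:     # the real RTT suddenly doubles
    srtt = update_srtt(srtt, rtt_sample)
    print(round(srtt, 1))
```

Each step closes only about a tenth of the remaining gap, so after five samples the estimate is still well below the new RTT.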

Given the estimator SRTT , which changes as the RTT changes, [RFC0793] recommended that the RTO be set to the following:

$$\mathrm{RTO} = \min(\mathit{ubound}, \max(\mathit{lbound}, (\mathrm{SRTT})\,\beta))$$

where β is a delay variance factor with a recommended value of 1.3 to 2.0,

ubound is an upper bound (suggested to be, e.g., 1 minute),

and lbound is a lower bound (suggested to be, e.g., 1s) on the RTO.

We shall call this assignment procedure the classic method . It generally results in the RTO being set either to 1s, or to about twice SRTT .
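The clamping in the classic method can be sketched as follows. The function name is hypothetical; β = 2.0 and the bounds of 1 s and 60 s are taken from the suggested ranges above.

```python
def classic_rto(srtt_s, beta=2.0, lbound_s=1.0, ubound_s=60.0):
    """Classic RTO: beta times SRTT, clamped to [lbound, ubound]."""
    return min(ubound_s, max(lbound_s, srtt_s * beta))


for srtt_s in (0.1, 2.0, 50.0):
    print(srtt_s, "->", classic_rto(srtt_s))
```

For small SRTT values the lower bound wins and the RTO is 1 s; otherwise it is twice SRTT until the upper bound takes over.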

For relatively stable distributions of the RTT, this was adequate. However, when TCP was run over networks with highly variable RTTs (e.g., early packet radio networks), it did not perform so well.

In [J88] , Jacobson detailed problems with the classic method further—basically, that the timer specified by [RFC0793] cannot keep up with wide fluctuations in the RTT (and in particular, it causes unnecessary retransmissions when the real RTT is much larger than expected).

To address this problem, the method used to assign the RTO was enhanced to accommodate a larger variability in the RTT.

This is accomplished by keeping track of an estimate of the variability in the RTT measurements in addition to the estimate of its average .

Setting the RTO based on both a mean and a variability estimator provides a better timeout response to wide fluctuations in the roundtrip times than just calculating the RTO as a constant multiple of the mean.

If we think of the RTT measurements made by TCP as samples of a statistical process, estimating both the mean and variance (or standard deviation) helps to make better predictions about the possible future values the process may take on.

A good prediction for the range of possible values for the RTT helps TCP determine an RTO that is neither too large nor too small in most cases.

The following equations are applied to each RTT measurement $M$ (called $\mathrm{RTT}_s$ earlier):

$$\mathit{srtt} \leftarrow (1 - g)(\mathit{srtt}) + (g)M$$

$$\mathit{rttvar} \leftarrow (1 - h)(\mathit{rttvar}) + (h)(|M - \mathit{srtt}|)$$

$$\mathrm{RTO} = \mathit{srtt} + 4(\mathit{rttvar})$$

Here, the value srtt effectively replaces the earlier value of SRTT, and the value rttvar, which becomes an EWMA of the mean deviation, is used instead of β to help determine the RTO.

This is the basis for the way many TCP implementations compute their RTOs to this day, and because of its adoption as the basis for [RFC6298] we shall call it the standard method , although there are slight refinements in [RFC6298] .
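The sketch below follows the standard method with the refinements described in [RFC6298]: the first measurement initializes srtt to M and rttvar to M/2, the gains are g = 1/8 and h = 1/4, rttvar is updated before srtt, and the result is rounded up to a minimum of 1 s. The class and parameter names are hypothetical, and the 1 ms clock granularity is an assumed value.

```python
class RtoEstimator:
    """RTO estimator in the style of RFC 6298 (illustrative sketch only)."""

    def __init__(self, g=1 / 8, h=1 / 4, clock_granularity_s=0.001, min_rto_s=1.0):
        self.g, self.h = g, h
        self.granularity = clock_granularity_s   # assumed timer resolution
        self.min_rto = min_rto_s
        self.srtt = None
        self.rttvar = None
        self.rto = 1.0                           # initial RTO before any measurement

    def on_measurement(self, m):
        if self.srtt is None:
            # First sample: no history yet.
            self.srtt = m
            self.rttvar = m / 2
        else:
            # rttvar is updated first, using srtt from before this sample.
            self.rttvar = (1 - self.h) * self.rttvar + self.h * abs(m - self.srtt)
            self.srtt = (1 - self.g) * self.srtt + self.g * m
        self.rto = max(self.min_rto,
                       self.srtt + max(self.granularity, 4 * self.rttvar))
        return self.rto


est = RtoEstimator(min_rto_s=0.0)   # floor disabled to make the raw values visible
for m in (0.100, 0.200, 0.110):
    print(round(est.on_measurement(m), 4))
```

Note how a single large sample raises the RTO mainly through rttvar rather than srtt, which is what lets this method tolerate wide fluctuations.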

Once a sending TCP has established its RTO based upon measurements of the time-varying values of effective RTT, whenever it sends a segment it ensures that a retransmission timer is set appropriately.

When setting a retransmission timer, the sequence number of the so-called timed segment is recorded, and if an ACK is received in time, the retransmission timer is canceled.

The next time the sender emits a packet with data in it, a new retransmission timer is set, the old one is canceled, and the new sequence number is recorded.

The sending TCP therefore continuously sets and cancels one retransmission timer per connection; if no data is ever lost, no retransmission timer ever expires.

When TCP fails to receive an ACK for a segment it has timed on a connection within the RTO, it performs a timer-based retransmission.

TCP considers a timer-based retransmission as a fairly major event; it reacts very cautiously when it happens by quickly reducing the rate at which it sends data into the network. It does this in two ways.

The first way is to reduce its sending window size based on congestion control procedures.

The other way is to keep increasing a multiplicative backoff factor applied to the RTO each time a retransmitted segment is again retransmitted.

In particular, the RTO value is (temporarily) multiplied by the value γ to form the backed-off timeout when multiple retransmissions of the same segment occur:

$$\mathrm{RTO} \leftarrow \gamma\,\mathrm{RTO}$$

In ordinary circumstances, γ has the value 1.

On subsequent retransmissions, γ is doubled: 2, 4, 8, and so forth.

There is typically a maximum backoff factor that γ is not allowed to exceed (Linux ensures that the used RTO never exceeds the value TCP_RTO_MAX , which defaults to 120s).

Once an acceptable ACK is received, γ is reset to 1.
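The backoff behavior can be sketched as a small state holder. The class and method names are hypothetical, and the 1.5 s base RTO is a made-up value; the 120 s cap mirrors the Linux limit mentioned above.

```python
class BackoffTimer:
    """Tracks the backoff factor gamma applied to a connection's RTO (sketch)."""

    def __init__(self, base_rto_s, rto_max_s=120.0):
        self.base_rto = base_rto_s
        self.rto_max = rto_max_s
        self.gamma = 1

    def current_timeout(self):
        return min(self.gamma * self.base_rto, self.rto_max)

    def on_timeout(self):
        self.gamma *= 2      # the same segment is retransmitted again

    def on_ack(self):
        self.gamma = 1       # an acceptable ACK ends the backoff


timer = BackoffTimer(base_rto_s=1.5)
for _ in range(8):
    print(timer.current_timeout())
    timer.on_timeout()
timer.on_ack()
print(timer.current_timeout())
```

The printed timeouts double until they hit the cap, then return to the base RTO once an ACK arrives.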

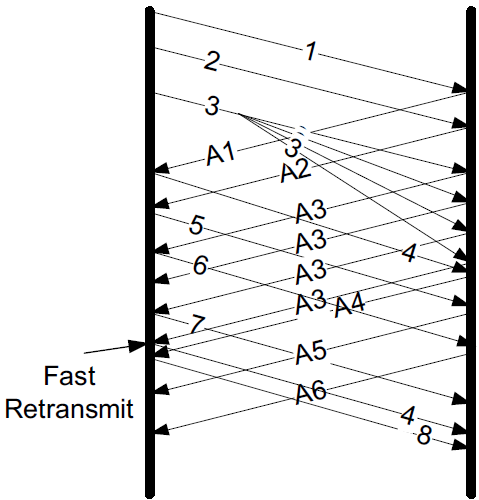

Fast retransmit [RFC5681] is a TCP procedure that can induce a packet retransmission based on feedback from the receiver instead of requiring a retransmission timer to expire.

TCP generates an immediate acknowledgment (a duplicate ACK) when an out-of-order segment is received, and the loss of a segment implies out-of-order arrivals at the receiver when subsequent data arrives.

When this happens, a hole is created at the receiver.

The sender’s job then becomes filling the receiver’s holes as quickly and efficiently as possible.

The duplicate ACKs sent immediately when out-of-order data arrives are not delayed.

The reason is to let the sender know that a segment was received out of order, and to indicate what sequence number is expected (i.e., where the hole is).

When SACK is used, these duplicate ACKs typically contain SACK blocks as well, which can provide information about more than one hole.

A duplicate ACK (with or without SACK blocks) arriving at a sender is a potential indicator that a packet sent earlier has been lost.

It can also appear when there is packet reordering in the network—if a receiver receives a packet for a sequence number beyond the one it is expecting next, the expected packet could be either missing or merely delayed.

TCP waits for a small number of duplicate ACKs (called the duplicate ACK threshold or dupthresh ) to be received before concluding that a packet has been lost and initiating a fast retransmit.

Traditionally, dupthresh has been a constant (with value 3 [RFC5681] ), but some nonstandard implementations (including Linux) alter this value based on the current measured level of reordering.

A TCP sender observing at least dupthresh duplicate ACKs retransmits one or more packets that appear to be missing without waiting for a retransmission timer to expire. It may also send additional data that has not yet been sent.

Packet loss inferred by the presence of duplicate ACKs is assumed to be related to network congestion , and congestion control procedures are invoked along with fast retransmit .

Without SACK, no more than one segment is typically retransmitted until an acceptable ACK is received.

With SACK, ACKs contain additional information allowing the sender to fill more than one hole in the receiver per RTT.
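The duplicate ACK counting described above can be sketched as follows. This is a simplification with hypothetical names: it uses a fixed dupthresh of 3, ignores sequence number wraparound, and treats every ACK that repeats the highest ACK number as a duplicate (a real sender also excludes window updates and segments that carry data).

```python
class DupAckDetector:
    """Counts duplicate ACKs and signals when fast retransmit should start."""

    def __init__(self, dupthresh=3):
        self.dupthresh = dupthresh
        self.highest_ack = None
        self.dup_count = 0

    def on_ack(self, ack_no):
        """Return True when this ACK should trigger a fast retransmit."""
        if self.highest_ack is None or ack_no > self.highest_ack:
            # The cumulative ACK advanced: start counting again.
            self.highest_ack = ack_no
            self.dup_count = 0
            return False
        if ack_no == self.highest_ack:
            self.dup_count += 1
            return self.dup_count == self.dupthresh
        return False    # old ACK, ignore


detector = DupAckDetector()
for ack_no in (1449, 1449, 1449, 1449, 5793):
    print(ack_no, detector.on_ack(ack_no))
```

Only the third duplicate returns True, so a single reordered packet that produces one or two duplicates does not cause a retransmission.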

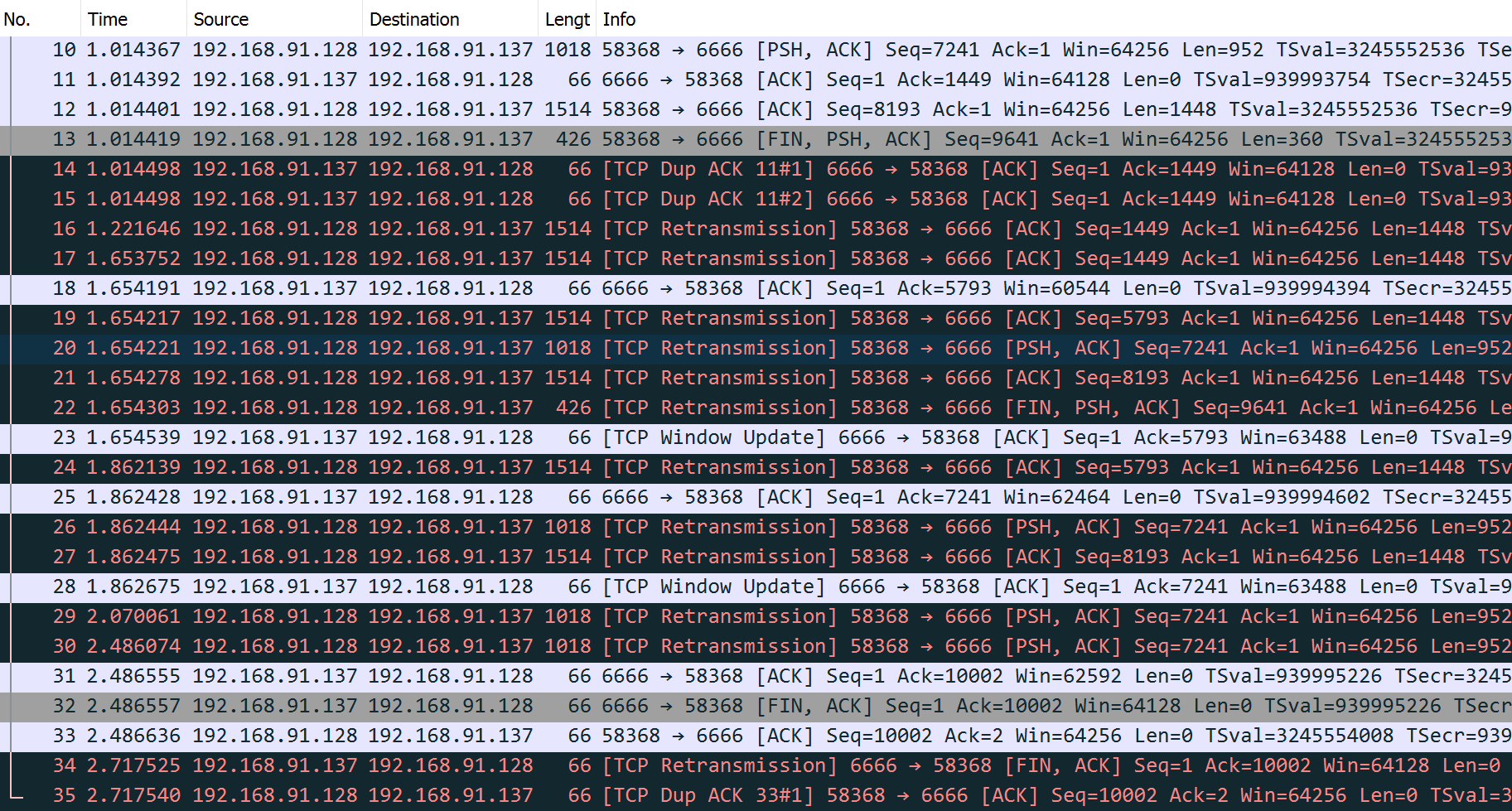

Packets 23 and 28 are window update ACKs: each has a duplicate sequence number (because no data is being carried) but contains a change to the TCP flow control window. The window changes from 65,160 bytes to 63,488 bytes.

Thus, they are not counted toward the three-duplicate-ACK threshold required to initiate a fast retransmit.

Window updates merely provide a copy of the window advertisement.

Packets 14 and 15 are both duplicate ACKs for sequence number 1449.

The arrival of the second of these duplicate ACKs triggers the fast retransmit of segment 1449 by packets 16 and 17.

The retransmissions from packet 19 to 22 are somewhat different from the first two.

When the first two retransmissions take place, the sending TCP notes the highest sequence number (called the recovery point) it had sent just before it performed the retransmission (9641 + 360 = 10001).

TCP is considered to be recovering from loss after a retransmission until it receives an ACK that matches or exceeds the sequence number of the recovery point.

In this example, the ACK at packet 18 is not for 10001, but instead for 5793.

This number is larger than the previous highest ACK value seen (1449), but not enough to meet or exceed the recovery point (10001).

This type of ACK is called a partial ACK for this reason.

When partial ACKs arrive, the sending TCP immediately sends the segments that appear to be missing (5793 to 9641 in this case) and continues this way until the recovery point is matched or exceeded by an arriving ACK.

If permitted by congestion control procedures, it may also send new data it has not yet sent.

Because no SACKs are being used, the sender can learn of at most one receiver hole per round-trip time, indicated by the increase in the ACK number of returning packets, which can only occur once a retransmission filling the receiver’s lowest-numbered hole has been received and ACKed.
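The distinction between duplicate, partial, and full ACKs during recovery can be sketched with a small classifier. The function name is hypothetical and sequence number wraparound is ignored; the numbers in the usage lines are the ones from the example above.

```python
def classify_ack(ack_no, highest_ack_seen, recovery_point):
    """Classify an ACK that arrives while the sender is recovering from loss."""
    if ack_no <= highest_ack_seen:
        return "duplicate"   # nothing new was acknowledged
    if ack_no < recovery_point:
        return "partial"     # progress, but another hole remains
    return "full"            # recovery point reached: recovery is over


print(classify_ack(1449, 1449, 10001))
print(classify_ack(5793, 1449, 10001))
print(classify_ack(10001, 5793, 10001))
```

Each partial ACK reveals the next hole, which is why a sender without SACK can repair at most one hole per round trip.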

5. Retransmission with Selective Acknowledgments

With the standardization of the Selective Acknowledgment options in [RFC2018] , a SACK-capable TCP receiver is able to describe data it has received with sequence numbers beyond the cumulative ACK Number field it sends in the primary portion of the TCP header.

The gaps between the ACK number and other in-window data cached at the receiver are called holes .

Data with sequence numbers beyond the holes are called out-of-sequence data because that data is not contiguous, in terms of its sequence numbers, with the other data the receiver has already received.

The job of a sending TCP is to fill the holes in the receiver by retransmitting any data the receiver is missing, yet to be as efficient as possible by not resending data the receiver already has.

In many circumstances, the properly operating SACK sender is able to fill these holes more quickly and with fewer unnecessary retransmissions than a comparable non-SACK sender because it does not have to wait an entire RTT to learn about additional holes.

When the SACK option is being used, an ACK can be augmented with up to three or four SACK blocks that contain information about out-of-sequence data at the receiver.

Each SACK block contains two 32-bit sequence numbers representing the first and last sequence numbers (plus 1) of a continuous block of out-of-sequence data being held at the receiver.

A SACK option that specifies n blocks has a length of 8n + 2 bytes (8n bytes for the sequence numbers and 2 to indicate the option kind and length), so the 40 bytes available to hold TCP options can specify a maximum of four blocks.

It is expected that SACK will often be used in conjunction with the TSOPT, which takes an additional 10 bytes (plus 2 bytes of padding), meaning that SACK is typically able to include only three blocks per ACK.
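The option-size arithmetic above can be checked with a short sketch. The function names are illustrative, not taken from any TCP stack; the option kind value 5 for SACK comes from [RFC2018].

```python
import struct

SACK_KIND = 5          # TCP option kind for SACK [RFC2018]
MAX_OPTION_SPACE = 40  # bytes available for all TCP options


def sack_option_length(n_blocks):
    """Length in bytes of a SACK option carrying n_blocks blocks (8n + 2)."""
    return 8 * n_blocks + 2


def max_sack_blocks(other_option_bytes=0):
    """Largest n for which 8n + 2 fits in the remaining option space."""
    return (MAX_OPTION_SPACE - other_option_bytes - 2) // 8


def encode_sack_option(blocks):
    """Pack (left_edge, right_edge) pairs as kind, length, then 32-bit edges."""
    body = b"".join(struct.pack("!II", left, right) for left, right in blocks)
    header = struct.pack("!BB", SACK_KIND, sack_option_length(len(blocks)))
    return header + body


print(max_sack_blocks())    # 4: no other options present
print(max_sack_blocks(12))  # 3: TSOPT takes 10 bytes plus 2 bytes of padding
print(len(encode_sack_option([(2897, 5793)])))  # 10 bytes for one block
```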

With three distinct blocks, up to three holes can be reported to the sender.

If not limited by congestion control, all three could be filled within one round-trip time using a SACK-capable sender.

An ACK packet containing one or more SACK blocks is sometimes called simply a SACK.

A SACK-capable receiver is allowed to generate SACKs if it has received the SACK-Permitted option during the TCP connection establishment.

Generally speaking, a receiver generates SACKs whenever there is any out-of-order data in its buffer. This can happen either:

because data was lost in transit, or

because it has been reordered and newer data has arrived at the receiver before older data.

The receiver places in the first SACK block the sequence number range contained in the segment it has most recently received.

Because the space in a SACK option is limited, it is best to ensure that the most recent information is always provided to the sending TCP, if possible.

Other SACK blocks are listed in the order in which they appeared as first blocks in previous SACK options.

That is, they are filled in by repeating the most recently sent SACK blocks (in other segments) that are not subsets of another block about to be placed in the option being constructed.
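The block-ordering rule just described can be sketched as follows. This is a simplified model, not a real receiver: it assumes the out-of-order data is already held as a list of merged `(left, right)` ranges, and that `history` lists the first blocks of earlier SACKs, most recent first. All names are hypothetical.

```python
def covers(outer, inner):
    """True if range inner lies entirely within range outer."""
    return outer[0] <= inner[0] and inner[1] <= outer[1]


def build_sack_blocks(ooo_ranges, newest_segment, history, max_blocks=3):
    """Choose the SACK blocks for the next ACK.

    ooo_ranges     -- merged out-of-order ranges held at the receiver
    newest_segment -- (left, right) of the segment that just arrived
                      (assumed to be out-of-order data, so a range covers it)
    history        -- first blocks of earlier SACKs, most recent first
    """
    # First block: the range that contains the most recently received segment.
    first = next(r for r in ooo_ranges if covers(r, newest_segment))
    blocks = [first]
    # Remaining blocks: repeat earlier first blocks, skipping any that are
    # subsets of a block already placed in this option.
    for old in history:
        if len(blocks) == max_blocks:
            break
        if any(covers(b, old) for b in blocks):
            continue
        blocks.append(old)
    return blocks


print(build_sack_blocks([(2897, 5793), (8193, 10002)],
                        (8193, 10002),
                        [(2897, 5793)]))
# [(8193, 10002), (2897, 5793)]
```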

The purpose of including more than one SACK block in a SACK option and repeating these blocks across multiple SACKs is to provide some redundancy in the case where SACKs are lost.

If SACKs were never lost, [RFC2018] points out that only one SACK block would be required per SACK for full SACK functionality.

Unfortunately, SACKs and regular ACKs are sometimes lost and are not retransmitted by TCP unless they contain data (or the SYN or FIN control bit fields are turned on).

To take advantage of SACKs, a SACK-capable sender must be used: one that treats the SACK blocks appropriately and performs selective retransmission by sending only those segments missing at the receiver, a process also called selective repeat.

The SACK sender keeps track of any cumulative ACK information it receives (like any TCP sender), plus any SACK information it receives.

When a SACK-capable sender has the opportunity to perform a retransmission, usually because it has received a SACK or seen multiple duplicate ACKs, it has the choice of whether it sends new data or retransmits old data.

The SACK information provides the sequence number ranges present at the receiver, so the sender can infer what segments likely need to be retransmitted to fill the receiver’s holes.

The simplest approach is to have the sender first fill the holes at the receiver and then move on to send more new data [RFC3517] if the congestion control procedures allow. This is the most common approach.

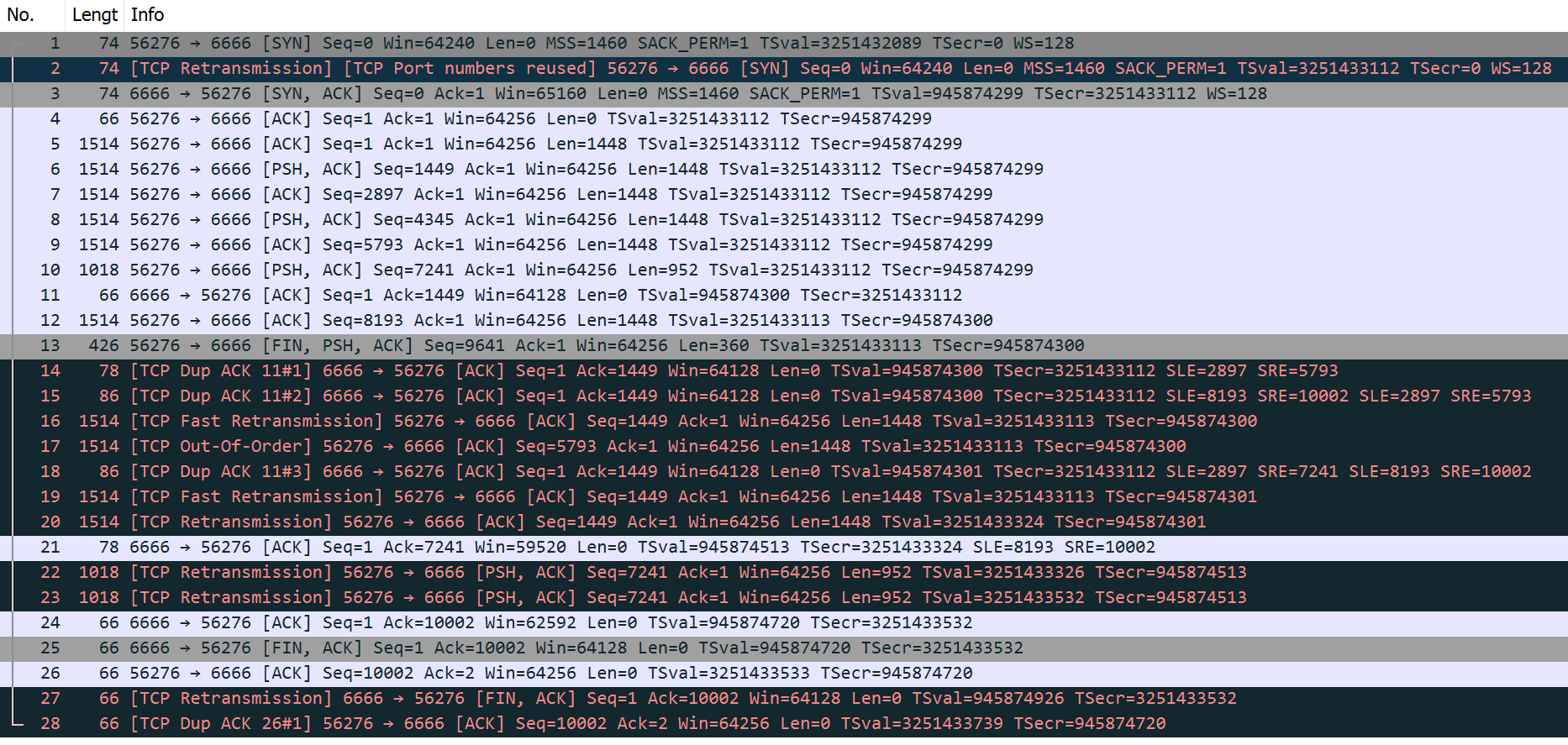

To understand how the use of SACK alters the sender and receiver behaviors, we repeat the preceding fast retransmit experiment, but this time the sender and receiver are using SACK.

The SYN packet from the sender, the first packet of the trace, also contains the SACK-Permitted option.

These options are present only at connection setup, and thus they only ever appear in segments with the SYN bit field set.

Once the connection is permitted to use SACKs, packet loss generally causes the receiver to start producing SACKs.

The ACK at packet 14 for 1449 contains a SACK block of [2897:5793], indicating a hole at the receiver.

The receiver is missing the sequence number range [1449,2896], which corresponds to the single 1448-byte packet starting with sequence number 1449.

The SACK arriving at packet 15 contains two SACK blocks: [8193:10002] and [2897:5793].

Recall that the first SACK blocks from previous SACKs are repeated in later positions in subsequent SACKs for robustness against ACK loss.

This SACK is a duplicate ACK for sequence number 1449 and suggests that the receiver now requires the missing segments starting with sequence numbers 1449 and 5793.

The SACK sender has not had to wait an RTT to retransmit lost segment 5793 after retransmitting segment 1449.
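How a sender might infer holes from the cumulative ACK and the SACK blocks in this trace can be sketched in a few lines. The function is illustrative only; it uses half-open ranges, so the hole `(1449, 2897)` corresponds to the missing bytes 1449 through 2896.

```python
def find_holes(cum_ack, sack_blocks):
    """Return half-open (start, end) ranges the receiver appears to be missing.

    cum_ack     -- cumulative ACK number (next byte the receiver expects)
    sack_blocks -- (left, right) pairs; right is the last byte plus 1
    """
    holes = []
    next_needed = cum_ack
    for left, right in sorted(sack_blocks):
        if left > next_needed:
            holes.append((next_needed, left))
        next_needed = max(next_needed, right)
    return holes


print(find_holes(1449, [(2897, 5793)]))
# [(1449, 2897)]
print(find_holes(1449, [(8193, 10002), (2897, 5793)]))
# [(1449, 2897), (5793, 8193)]
```

The second call shows why the SACK sender does not need to wait a round trip: both holes are visible from a single ACK.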

6. Spurious Timeouts and Retransmissions

Under a number of circumstances, TCP may initiate a retransmission even when no data has been lost.

Such undesirable retransmissions are called spurious retransmissions and are caused by spurious timeouts (timeouts firing too early) and other reasons such as packet reordering, packet duplication, or lost ACKs.

Spurious timeouts can occur when the real RTT has recently increased significantly, beyond the RTO. This happens more frequently in environments where lower-layer protocols have widely varying performance (e.g., wireless).

A number of approaches have been suggested to deal with spurious timeouts. They generally involve a detection algorithm and a response algorithm.

The detection algorithm attempts to determine whether a timeout or timer-based retransmission was spurious.

The response algorithm is invoked once a timeout or retransmission is deemed spurious.

Its purpose is to undo or mitigate some action that is otherwise normally performed by TCP when a retransmission timer expires.

With a non-SACK TCP, an ACK can indicate only the highest in-sequence segment back to the sender. With SACK, it can signal other (out-of-order) segments as well.

DSACK or D-SACK (which stands for duplicate SACK [RFC2883]) is a rule, applied at the SACK receiver and interoperable with conventional SACK senders, that causes the first SACK block to indicate the sequence numbers of a duplicate segment that has arrived at the receiver. Its usual purpose is to let the sender determine when a retransmission was not necessary and to learn additional facts about the network.

The change to the SACK receiver is to allow a SACK block to be included even if it covers sequence numbers below (or equal to) the cumulative ACK Number field.

It applies equally well in cases where the DSACK information is above the cumulative ACK Number field; this happens for duplicated out-of-order segments.

DSACK information is included in only a single ACK, and such an ACK is called a DSACK.

DSACK information is not repeated across multiple SACKs as conventional SACK information is.

Exactly what a sender given DSACK information is supposed to do with it is not specified by [RFC2883].
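Although the response is left open, a sender first has to recognize that an ACK carries DSACK information. A minimal sketch of one such check follows. It assumes the two cases described above: a first block that starts below the cumulative ACK, or a first block that is contained in the second block (a duplicated out-of-order segment). The function name is hypothetical, and sequence-number wraparound is ignored.

```python
def is_dsack(cum_ack, sack_blocks):
    """Guess whether the first SACK block reports a duplicate segment.

    cum_ack     -- cumulative ACK number carried in the same packet
    sack_blocks -- (left, right) pairs in the order they appear in the option
    """
    if not sack_blocks:
        return False
    left, right = sack_blocks[0]
    # Case 1: the block reports data that is already cumulatively ACKed.
    if left < cum_ack:
        return True
    # Case 2: the block lies inside the second block, so it repeats
    # out-of-order data the receiver was already holding.
    if len(sack_blocks) > 1:
        left2, right2 = sack_blocks[1]
        return left2 <= left and right <= right2
    return False


print(is_dsack(5793, [(1449, 2897)]))                # True
print(is_dsack(1449, [(2897, 5793)]))                # False
print(is_dsack(1449, [(2897, 4345), (2897, 5793)]))  # True
```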

The experimental Eifel Detection Algorithm [RFC3522] deals with the retransmission ambiguity problem using the TCP TSOPT to detect spurious retransmissions.

After a retransmission timeout occurs, Eifel awaits the next acceptable ACK.

If the next acceptable ACK indicates that the first copy of a retransmitted packet (called the original transmit) was the cause for the ACK, the retransmission is considered to be spurious.

The Eifel Detection Algorithm is able to detect spurious behavior earlier than the approach using only DSACK because it relies on ACKs generated as a result of packets arriving before loss recovery is initiated.

DSACKs, conversely, are able to be sent only after a duplicate segment has arrived at the receiver and able to be acted upon only after the DSACK is returned to the sender.

Detecting spurious retransmissions early can offer advantages, because it allows the sender to avoid most of the go-back-N behavior.

The mechanics of the Eifel Detection Algorithm are simple. It requires the use of the TCP TSOPT.

When a retransmission is sent (either a timer-based retransmission or a fast retransmit), the TSV value is stored.

When the first acceptable ACK covering its sequence number is received, the incoming ACK’s TSER is examined.

If it is smaller than the stored value, the ACK corresponds to the original transmission of the packet and not the retransmission, implying that the retransmission must have been spurious.

This approach is fairly robust to ACK loss as well.

If an ACK is lost, any subsequent ACKs still have TSER values less than the stored TSV of the retransmitted segment.

Thus, a retransmission can be deemed spurious as a result of any of the window’s worth of ACKs arriving, so a loss of any single ACK is not likely to cause a problem.
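The timestamp comparison at the heart of this detection step can be sketched as a small class. This is a simplified illustration rather than the full algorithm of [RFC3522]: the class and method names are made up, timestamps are plain integers, and timestamp wraparound is ignored.

```python
class EifelDetector:
    """Tracks the TSV of a retransmission and checks the next acceptable ACK."""

    def __init__(self):
        self.retransmit_tsv = None

    def on_retransmit(self, tsv):
        # Remember the timestamp value carried by the first retransmission.
        if self.retransmit_tsv is None:
            self.retransmit_tsv = tsv

    def on_acceptable_ack(self, tser):
        """Return True if the ACK shows the retransmission was spurious."""
        if self.retransmit_tsv is None:
            return False  # no retransmission outstanding
        # An echoed timestamp older than the retransmission's TSV means the
        # ACK was triggered by the original transmission.
        spurious = tser < self.retransmit_tsv
        self.retransmit_tsv = None
        return spurious


detector = EifelDetector()
detector.on_retransmit(tsv=1000)
print(detector.on_acceptable_ack(tser=900))  # True: ACK echoes the original
```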

The Eifel Detection Algorithm can be combined with DSACKs, which can be beneficial when an entire window’s worth of ACKs is lost but both the original transmit and the retransmission have arrived at the receiver.

In this particular case, the arriving retransmit causes a DSACK to be generated.

The Eifel Detection Algorithm would by default conclude that the retransmission is spurious.

It is thought, however, that if so many ACKs are being lost, allowing TCP to believe the retransmission was not spurious is useful (e.g., to induce it to start sending more slowly—a consequence of the congestion control procedures).

Thus, arriving DSACKs cause the Eifel Detection Algorithm to conclude that the corresponding retransmission is not spurious.

Forward-RTO Recovery (F-RTO) [RFC5682] is a standard algorithm for detecting spurious retransmissions.

It does not require any TCP options, so when it is implemented in a sender, it can be used effectively even with an older receiver that does not support the TCP TSOPT.

It attempts to detect only spurious retransmissions caused by expiration of the retransmission timer; it does not deal with the other causes for spurious retransmissions or duplications mentioned before.

F-RTO makes a modification to the action TCP ordinarily takes after a timer-based retransmission.

These retransmissions are for the smallest sequence number for which no ACK has yet been received.

Ordinarily, TCP continues sending additional adjacent packets in order as additional ACKs arrive. This is the go-back-N behavior.

F-RTO modifies the ordinary behavior of TCP by having TCP send new (so far unsent) data after the timeout-based retransmission when the first ACK arrives. It then inspects the second arriving ACK.

If either of the first two ACKs arriving after the retransmission was sent is a duplicate ACK, the retransmission is deemed OK.

If they are both acceptable ACKs that advance the sender’s window, the retransmission is deemed to have been spurious.

If the transmission of new data results in the arrival of acceptable ACKs, the arrival of the new data is moving the receiver’s window forward.

If such data is only causing duplicate ACKs, there must be one or more holes at the receiver.

In either case, the reception of new data at the receiver does not harm the overall data transfer performance (provided there are sufficient buffers at the receiver).
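The F-RTO decision rule on the first two ACKs can be written down compactly. The sketch below is a simplification of [RFC5682]: it treats an ACK as acceptable only if it advances the sender's left window edge, and it ignores sequence-number wraparound. The function name and return strings are illustrative.

```python
def frto_classify(snd_una, acks):
    """Classify a timer-based retransmission from the ACKs that follow it.

    snd_una -- highest cumulative ACK seen when the timer expired
    acks    -- ACK numbers of the ACKs arriving after the retransmission
    """
    for ack in acks[:2]:
        if ack <= snd_una:
            # A duplicate ACK: there is a real hole at the receiver.
            return "not spurious"
        snd_una = ack  # acceptable ACK, the window advances
    # Both of the first two ACKs advanced the window.
    return "spurious" if len(acks) >= 2 else "undecided"


print(frto_classify(1000, [2000, 3000]))  # spurious
print(frto_classify(1000, [2000, 2000]))  # not spurious
```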

The Eifel Response Algorithm [RFC4015] is a standard set of operations to be executed by a TCP once a retransmission has been deemed spurious.

Because the response algorithm is logically decoupled from the Eifel Detection Algorithm, it can be used with any of the detection algorithms we just discussed.

The Eifel Response Algorithm was originally intended to operate for both timer-based and fast retransmit spurious retransmissions but is currently specified only for timer-based retransmissions.

7. Packet Reordering and Duplication

In addition to packet loss, other packet delivery anomalies such as duplication and reordering can also affect TCP’s operation. In both of these cases, we wish TCP to be able to distinguish between packets that are reordered or duplicated and those that are lost.

Packet reordering can occur in an IP network because IP provides no guarantee that relative ordering between packets is maintained during delivery.

This can be beneficial (to IP at least), because IP can choose another path for traffic (e.g., one that is faster) without having to worry about the consequences. Doing so, however, may cause traffic freshly injected into the network to pass ahead of older traffic, so that the order of packet arrivals at the receiver does not match the order of transmission at the sender.

There are other reasons packet reordering may occur. For example, some high-performance routers employ multiple parallel data paths within the hardware [BPS99], and different processing delays among packets can lead to a departure order that does not match the arrival order.

Reordering may take place in the forward path or the reverse path of a TCP connection (or in some cases both). The reordering of data segments has a somewhat different effect on TCP than does the reordering of ACK packets.

Recall that because of asymmetric routing, it is frequently the case that ACKs travel along different network links (and through different routers) from data packets on the forward path.

When traffic is reordered, TCP can be affected in several ways.

If reordering takes place in the reverse (ACK) direction, it causes the sending TCP to receive some ACKs that move the window significantly forward followed by some evidently old redundant ACKs that are discarded.

This can lead to an unwanted burstiness (instantaneous high-speed sending) behavior in the sending pattern of TCP and also trouble in taking advantage of available network bandwidth, because of the behavior of TCP’s congestion control.

If reordering occurs in the forward direction, TCP may have trouble distinguishing this condition from loss.

Both loss and reordering result in the receiver receiving out-of-order packets that create holes between the next expected packet and the other packets received so far.

When reordering is moderate (e.g., two adjacent packets switch order), the situation can be handled fairly quickly.

When reorderings are more severe, TCP can be tricked into believing that data has been lost even though it has not.

This can result in spurious retransmissions, primarily from the fast retransmit algorithm.

Because a TCP receiver is supposed to immediately ACK any out-of-sequence data it receives in order to help induce fast retransmit to be triggered on packet loss, any packet that is reordered within the network causes a receiver to produce a duplicate ACK.

If fast retransmit were to be invoked whenever any duplicate ACK is received at the sender, a large number of unnecessary retransmissions would occur on network paths where a small amount of reordering is common.

To handle this situation, fast retransmit is triggered only after the duplicate threshold (dupthresh) has been reached.

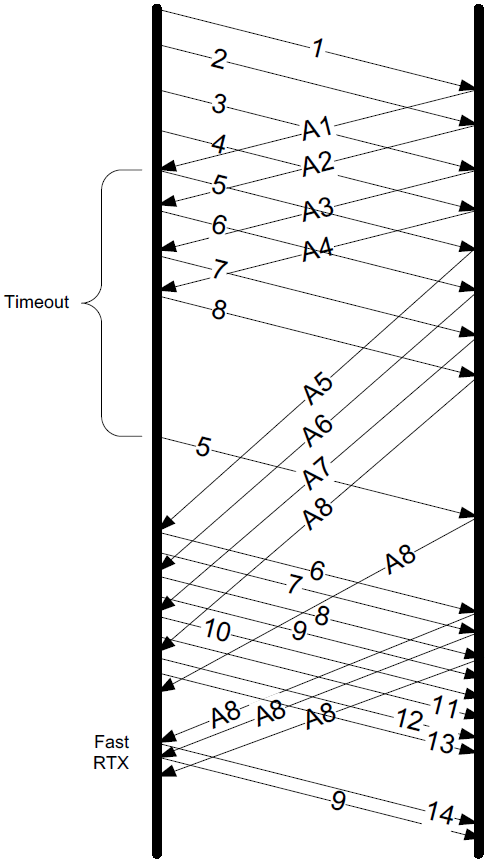

The left portion of the figure indicates how TCP behaves with light reordering, where dupthresh is set to 3.

In this case, the single duplicate ACK does not affect TCP. It is effectively ignored and TCP overcomes the reordering.

The right-hand side indicates what happens when a packet has been more severely reordered.

Because it is three positions out of sequence, three duplicate ACKs are generated. This invokes the fast retransmit procedure in the sending TCP, producing a duplicate segment at the receiver.
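The two cases in the figure can be reproduced with a toy model. It makes several simplifying assumptions: packets are numbered 1, 2, 3, … rather than by byte, the receiver sends one cumulative ACK per arriving packet, and every ACK reaches the sender in order. The function name is hypothetical.

```python
def fast_retransmit_triggered(arrival_order, dupthresh=3):
    """Return True if the ACK stream would trigger fast retransmit."""
    expected = 1      # next in-order packet the receiver is waiting for
    buffered = set()  # out-of-order packets held at the receiver
    last_ack = None
    dup_count = 0
    for pkt in arrival_order:
        if pkt == expected:
            expected += 1
            # Deliver any buffered packets that are now in order.
            while expected in buffered:
                buffered.remove(expected)
                expected += 1
        elif pkt > expected:
            buffered.add(pkt)
        ack = expected  # cumulative ACK: next packet needed
        if ack == last_ack:
            dup_count += 1
            if dup_count >= dupthresh:
                return True
        else:
            last_ack, dup_count = ack, 0
    return False


print(fast_retransmit_triggered([1, 3, 2, 4]))     # False: one duplicate ACK
print(fast_retransmit_triggered([1, 3, 4, 5, 2]))  # True: three duplicate ACKs
```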

The problem of distinguishing loss from reordering is not trivial. Dealing with it involves trying to decide when a sender has waited long enough to try to fill apparent holes at the receiver.

Fortunately, severe reordering on the Internet is not common [J03], so setting dupthresh to a relatively small number (such as the default of 3) handles most circumstances. That said, there are a number of research projects that modify TCP to handle more severe reordering [LLY07]. Some of these adjust dupthresh dynamically, as does the Linux TCP implementation.

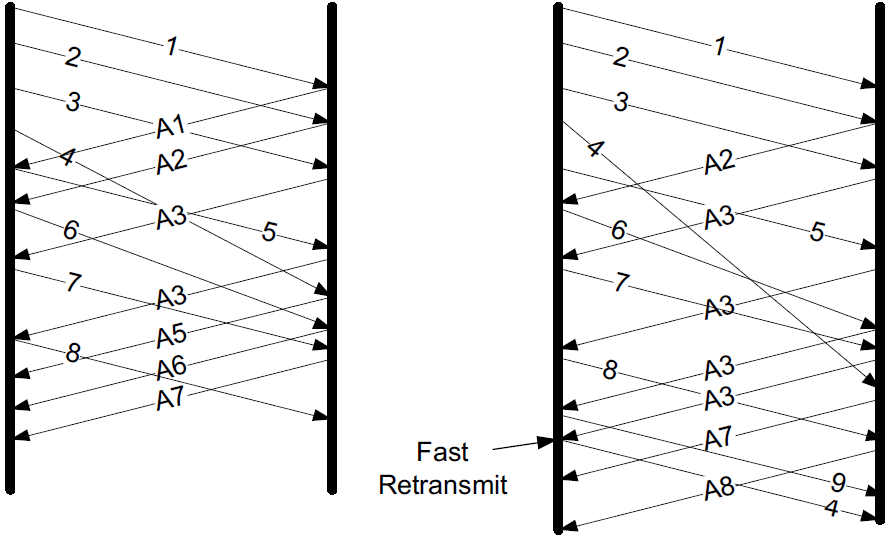

Although rare, the IP protocol may deliver a single packet more than one time. This can happen, for example, when a link-layer network protocol performs a retransmission and creates two copies of the same packet.

The effect of packet 3 being duplicated is to produce a series of duplicate ACKs from the receiver. This is enough to trigger a spurious fast retransmit, as the non-SACK sender may mistakenly believe that packets 5 and 6 have arrived earlier. With SACK (and DSACK, in particular) this is more easily diagnosed at the sender.

With DSACK, each of the duplicate ACKs for A3 contains DSACK information that segment 3 has already been received. Furthermore, none of them contains an indication of any out-of-order data, meaning the arriving packets (or their ACKs) must have been duplicates. TCP can often suppress spurious retransmissions in such cases.

Newer TCP implementations maintain many of the metrics, such as srtt, rttvar, and so on, in a routing or forwarding table entry or other systemwide data structure that exists even after TCP connections are closed.

When a new connection is created, TCP consults the data structure to see if there is any preexisting information regarding the path to the destination host with which it will be communicating.

If so, initial values for srtt, rttvar, and so on can be initialized to some value based on previous, relatively recent experience.

When a TCP connection closes down, it has the opportunity to update the statistics. This can be accomplished by replacing the existing statistics or updating them in some other way.
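A per-destination metrics cache of this kind can be sketched as a small dictionary wrapper. The class, its method names, and the destination address are hypothetical, and the update policy shown (keep the maximum of the stored and newly measured values) is only one of the possible choices.

```python
class PathMetricsCache:
    """Remembers srtt and rttvar per destination across connections."""

    def __init__(self):
        self._entries = {}  # destination -> (srtt, rttvar), in milliseconds

    def lookup(self, dest):
        """Return cached (srtt, rttvar) for dest, or None if nothing is known."""
        return self._entries.get(dest)

    def update_on_close(self, dest, srtt, rttvar):
        """Merge a closing connection's measurements into the cache."""
        old = self._entries.get(dest)
        if old is None:
            self._entries[dest] = (srtt, rttvar)
        else:
            # Assumed policy: keep the larger of the old and new values.
            self._entries[dest] = (max(old[0], srtt), max(old[1], rttvar))


cache = PathMetricsCache()
cache.update_on_close("192.0.2.1", srtt=29.0, rttvar=29.0)
cache.update_on_close("192.0.2.1", srtt=25.0, rttvar=35.0)
print(cache.lookup("192.0.2.1"))  # (29.0, 35.0)
```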

In the case of Linux 2.6, the values are updated to be the maximum of the existing values and those measured by the most recent TCP. These values can be inspected using the ip program available from the iproute2 suite of tools:

When TCP times out and retransmits, it does not have to retransmit the identical segment. Instead, TCP is allowed to perform repacketization, sending a bigger segment, which can increase performance. Naturally, this bigger segment cannot exceed the MSS announced by the receiver and should not exceed the path MTU.

This is allowed in the protocol because TCP identifies the data being sent and acknowledged by its byte number, not its segment (or packet) number.

TCP’s ability to retransmit a segment with a different size from the original segment provides another way of addressing the retransmission ambiguity problem. This has been the basis of an idea called STODER [TZZ05] that uses repacketization to detect spurious timeouts.
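Because TCP numbers bytes rather than segments, repacketization amounts to cutting a new segment from the unacknowledged byte stream. The sketch below illustrates this; the function name, the relative sequence numbers, and the payloads in the usage example are assumptions made for illustration.

```python
def repacketize(unacked_segments, mss):
    """Build one retransmission segment from the queued unacknowledged data.

    unacked_segments -- list of (seq, payload) pairs, in order and contiguous
    mss              -- maximum segment size announced by the receiver
    Returns (seq, payload) for the segment to retransmit.
    """
    first_seq = unacked_segments[0][0]
    # Treat the queued data as one byte stream starting at first_seq.
    stream = b"".join(payload for _, payload in unacked_segments)
    # Send as much as fits in a single segment.
    return first_seq, stream[:mss]


# Two small writes that were never ACKed are resent as a single segment.
seq, payload = repacketize([(14, b"line number 2\n"), (28, b"and 3\n")], mss=1460)
print(seq, len(payload))  # 14 20
```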

We can easily see repacketization in action. We use our nc program as a server and connect to it with telnet.

First we type the line hello there.

This produces a segment of 13 data bytes, including the carriage-return and newline characters produced when the Enter key is pressed.

We then disconnect the network and type line number 2 (14 bytes, including the newline).

We then wait about 45 s, type and 3, terminate the connection, and reconnect the network again:

Appendix A: Dropping Packets in Linux using tc and iptables

There are two simple ways to randomly drop packets on a Linux computer: using tc, the program dedicated to controlling traffic; and using iptables, the built-in firewall. [NETEM] [IPTABLES] [EBADNET] [DPLTC]

tc controls the transmit queues of your kernel. Normally when applications on your computer generate data to send, the data is passed to your kernel (via TCP and IP) for transmission on the network interface. The packets are transmitted in a first-in-first-out (FIFO) order.

tc allows you to change the queuing mechanisms (e.g. giving priority to specific types of packets), as well as emulate links by delaying and dropping packets.

Here we will use tc to drop packets. Because tc controls the transmit queues, we use it on a source computer (normally tc does not affect what is received by your computer, but there are exceptions).

netem is a special type of queuing discipline used for emulating networks. Configured with a loss of 25%, it tells the Linux kernel to drop on average 25% of the packets in the transmit queue. You can use different values of loss (e.g. 10%).

When using tc you can show the current queue disciplines using:

To show that it works, let's run a PING test. On computer node-1 (the computer where tc is NOT used) run:

To delete the above queue discipline use the delete command instead of replace:

iptables allows you to create rules that specify how packets coming into your computer and going out of your computer are treated (and for routers, also forwarded by the router). The rules for packets coming in are in the INPUT chain, packets going out are OUTPUT, and packets forwarded are in the FORWARD chain. We will only use the INPUT chain.

The rules can filter packets based on common packet identifiers (IP addresses, ports, protocol numbers) as well as other matching criteria. We will use a special statistic matching module. For each packet that matches the filter, some action is applied (e.g. DROP the packet, ACCEPT the packet, or some more complex operation).

On computer node-1 (the destination), to view the current set of rules:

There are no rules in any of the three chains. Note that the default policy (if a packet does not match any rule) is to ACCEPT packets.

Now to add a rule to the INPUT chain to drop 25% of incoming packets on computer node-1:

To demonstrate the packet dropping, run another PING test on the source node-0:

Returning to computer node-1, to delete a rule you can use the -D option:

(or you can refer to rules by number, e.g. iptables -D INPUT 1 to delete rule 1 from the INPUT chain).

Alternatively, we can specify to drop every nth packet, starting from packet p. We can also combine this with the standard filtering mechanisms of firewalls to drop only packets belonging to a particular source/destination pair or application.

This rule should drop packets 3, 7, 11, … for only one of the connections (with destination port 6666).
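The effect of such a rule can be modelled in a few lines. This is a toy model, not iptables itself: it assumes that packets matching the port filter are counted from zero and that a packet is dropped when its count modulo n equals p, which reproduces the sequence 3, 7, 11, … for n = 4 and p = 3. The function name is hypothetical.

```python
def apply_nth_drop(dest_ports, dport, every, packet):
    """Return a verdict per packet for a deterministic every-nth drop rule.

    dest_ports -- destination port of each arriving packet, in order
    dport      -- only packets to this port are counted and possibly dropped
    every      -- n: drop one packet in every n matching packets
    packet     -- p: position within each group of n that is dropped (0-based)
    """
    counter = 0
    verdicts = []
    for port in dest_ports:
        if port != dport:
            verdicts.append("ACCEPT")  # rule does not match; default policy
            continue
        verdicts.append("DROP" if counter % every == packet else "ACCEPT")
        counter += 1
    return verdicts


verdicts = apply_nth_drop([6666] * 12, dport=6666, every=4, packet=3)
print([i for i, v in enumerate(verdicts) if v == "DROP"])  # [3, 7, 11]
```

Packets to other ports pass through untouched and do not advance the counter, so only the selected connection sees the loss.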

Here is the output of an iperf3 test at the source node-0. 25% of the packets are dropped by the destination (receiver).

[TCPIP1] Kevin Fall and W. Stevens, TCP/IP Illustrated: The Protocols, Volume 1, 2nd edition, Addison-Wesley Professional, 2011.

[J88] V. Jacobson, Congestion Avoidance and Control. See https://ee.lbl.gov/papers/congavoid.pdf

[NETEM] netem [online]. https://wiki.linuxfoundation.org/networking/netem

[IPTABLES] Using iptables [online]. https://www.netfilter.org/documentation/HOWTO/packet-filtering-HOWTO-7.html

[EBADNET] Emulating Bad Networks [online]. https://samwho.dev/blog/emulating-bad-networks/

[DPLTC] Dropping Packets in Ubuntu Linux using tc and iptables [online]. https://sandilands.info/sgordon/dropping-packets-in-ubuntu-linux-using-tc-and-iptables

[RFC1122] R. Braden, ed., Requirements for Internet Hosts -- Communication Layers, Internet RFC 1122/STD 0003, Oct. 1989. See https://www.rfc-editor.org/rfc/rfc1122

[RFC2018] M. Mathis, J. Mahdavi, S. Floyd, and A. Romanow, TCP Selective Acknowledgment Options, Internet RFC 2018, Oct. 1996. See https://www.rfc-editor.org/rfc/rfc2018

[RFC2883] S. Floyd, J. Mahdavi, M. Mathis, and M. Podolsky, An Extension to the Selective Acknowledgement (SACK) Option for TCP, Internet RFC 2883, July 2000. See https://www.rfc-editor.org/rfc/rfc2883

[RFC3517] E. Blanton, M. Allman, K. Fall, and L. Wang, A Conservative Selective Acknowledgment (SACK)-Based Loss Recovery Algorithm for TCP, Internet RFC 3517, Apr. 2003. See https://www.rfc-editor.org/rfc/rfc3517

[RFC3522] R. Ludwig and M. Meyer, The Eifel Detection Algorithm for TCP, Internet RFC 3522 (experimental), Apr. 2003.

[RFC5681] M. Allman, V. Paxson, and E. Blanton, TCP Congestion Control, Internet RFC 5681, Sept. 2009. See https://www.rfc-editor.org/rfc/rfc5681

[RFC5682] P. Sarolahti, M. Kojo, K. Yamamoto, and M. Hata, Forward RTO-Recovery (F-RTO): An Algorithm for Detecting Spurious Retransmission Timeouts with TCP, Internet RFC 5682, Sept. 2009.

[RFC6298] V. Paxson, M. Allman, and J. Chu, Computing TCP’s Retransmission Timer, Internet RFC 6298, June 2011. See https://www.rfc-editor.org/rfc/rfc6298

[J03] S. Jaiswal et al., Measurement and Classification of Out-of-Sequence Packets in a Tier-1 IP Backbone, Proc. IEEE INFOCOM, Apr. 2003.

[LLY07] K. Leung, V. Li, and D. Yang, An Overview of Packet Reordering in Transmission Control Protocol (TCP): Problems, Solutions and Challenges, IEEE Trans. Parallel and Distributed Systems, 18(4), Apr. 2007.

[TZZ05] K. Tan and Q. Zhang, STODER: A Robust and Efficient Algorithm for Handling Spurious Timeouts in TCP, Proc. IEEE Globecom, Dec. 2005.
